Natural-Language-based Robot Task Inference and Completion in Real-World, Complex Environments

Human control of technical systems can be particularly effective when it leverages the flexibility and combinatorial power of spoken language. This interaction is severely compromised, however, when the control signals are corrupted and incomplete, deviating from the rich, intact language structure of healthy individuals. This may occur in elderly people, in patients with neurological disorders such as aphasia, or in patients with cognitive disturbances such as dementia – groups of people who will in future rely especially on technical and robotic assistance systems to maintain mobility, independence and communication. Even in the absence of language pathology, control signals can be degraded at the sensory level or ambiguous, with detrimental effects on task inference.

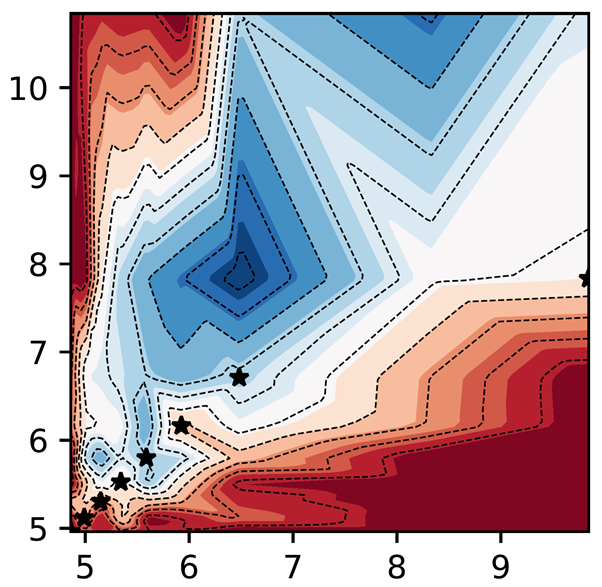

In this project, we seek to develop methods for robust and trustworthy control of technical systems from corrupted command inputs. For aphasic patients with severe language production disturbances, we will use state-of-the-art, linguistically informed machine learning and Natural Language Processing (NLP) models to extract information and infer meaning from multimodal signals that go beyond speech output and include intracranial electrophysiological activity recorded in the language network as well as non-linguistic signals such as eye movements. By viewing human subjects as situated agents in real-world, complex environments, we aim to construct an integrated understanding system through the convergence of AI, cognitive neuroscience and robotics.

Understandable and Trustworthy Human-Robot Interaction for Intuitive Communication

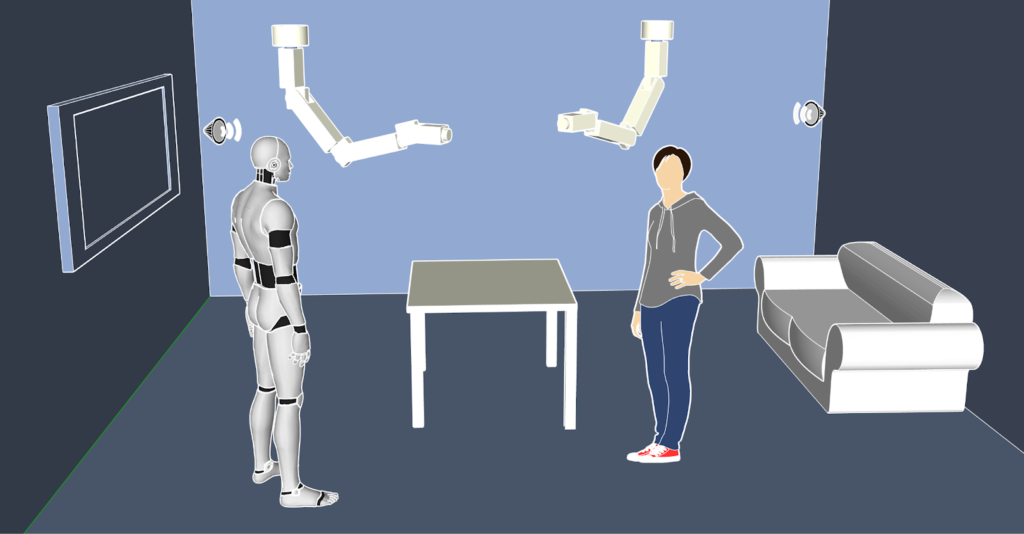

Interaction with next-generation robots will be very rich and will involve many modalities, such as speech, gesture, and various types of physical interaction. Since robots will inhabit the same physical spaces as humans, the embodiment and physical aspects of interaction will become especially important. How will robots have to behave around humans? What social norms will be expected, and can they be transferred from human interaction or will they differ? What does it take for humans to understand, trust and feel comfortable in such spaces?