Uncertainty-Aware 3D Human Pose Estimation & Tracking in the Wild

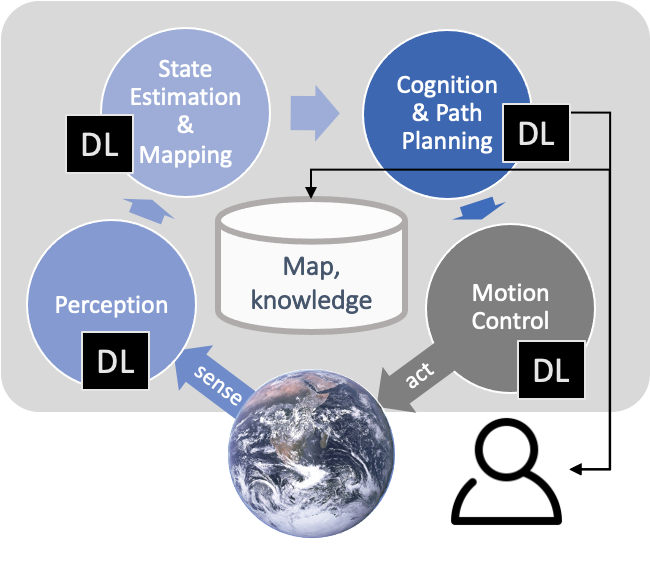

Explicit consideration of uncertainty has long played an important role in mobile robot estimation, navigation, and control. With the advent of deep learning, neural networks increasingly serve as building blocks in the complex, modular software stacks that handle robotic perception, estimation, navigation, and control. Understanding the uncertainties of these networks is proving essential to building robust and fail-safe mobile robotic systems. At the same time, quantifying them is not trivial, in particular when it comes to reliably estimating model (epistemic) uncertainty, and many open research questions remain about how to propagate uncertainties meaningfully to downstream tasks, e.g. from perception to estimation and/or control. In this project, we will therefore explore neural network architectures that support uncertainty quantification and tailor them to specific use cases of mobile robot operation.
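To make the distinction between model (epistemic) and data (aleatoric) uncertainty concrete, the following minimal sketch uses an ensemble-style decomposition based on the law of total variance. This is one common technique, not necessarily the approach pursued in this project. The function name `decompose_uncertainty` and the example numbers are hypothetical, and each ensemble member is assumed to predict a mean and a variance for a single scalar quantity, such as the depth of one body joint.

```python
from statistics import fmean, pvariance


def decompose_uncertainty(member_means, member_variances):
    """Split the predictive variance of an equally weighted ensemble.

    member_means:     mean predicted by each ensemble member (hypothetical input)
    member_variances: variance predicted by each ensemble member

    Returns (aleatoric, epistemic, total), where
      aleatoric = average of the predicted variances (data noise),
      epistemic = population variance of the predicted means (model disagreement),
      total     = aleatoric + epistemic (law of total variance).
    """
    aleatoric = fmean(member_variances)
    epistemic = pvariance(member_means)
    return aleatoric, epistemic, aleatoric + epistemic


# Illustrative values only: three members predicting one joint depth in metres.
aleatoric, epistemic, total = decompose_uncertainty(
    member_means=[1.0, 1.2, 0.8],
    member_variances=[0.1, 0.2, 0.3],
)
print(f"aleatoric={aleatoric:.4f} epistemic={epistemic:.4f} total={total:.4f}")
```

A downstream estimator or controller could, under these assumptions, treat a large epistemic term as a signal that the network is operating outside its training distribution, whereas a large aleatoric term points to noisy or ambiguous observations.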

Preference Learning for Human-Machine Interaction

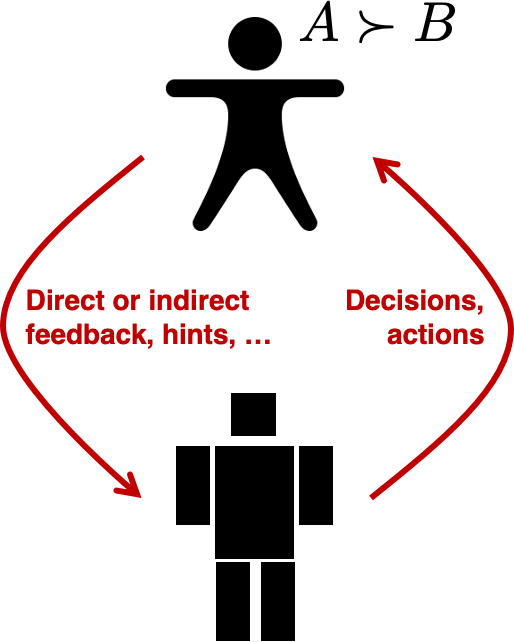

Machine learning (ML) is a key component of an AI methodology for human-centered robotics and is actively employed in control, cognition, interaction, and decision making. Preference learning, which has emerged in recent years as an independent branch of machine learning research, is especially relevant in this regard. Broadly speaking, preference learning deals with learning preference models from observed, revealed, or automatically extracted preference information, which is often expressed implicitly rather than explicitly and is qualitative rather than quantitative in nature. By providing a methodological basis for personalization, preference learning is thus highly relevant for a human-centered approach to AI, which requires the decisions or actions taken by a machine to be optimally adapted to the preferences and needs of a human in a given context.

In this project, we seek to develop preference learning methodology specifically tailored to human-centered robotics. This is challenging for various reasons: the predictions sought are complex in nature (e.g., order relations), supervision is weak (inferred from hints, partial, noisy, …), and performance metrics are hard to optimize. Motivated by the goals of the overall initiative, we seek to extend existing methodology in two directions. First, preference learning should be interactive: the basic idea is to improve service by letting the machine adapt to the preferences of the human, assuming that human and machine accomplish a task jointly and interactively while learning with and from each other. Second, preference learning should be uncertainty-aware, i.e., the machine should be aware of its uncertainty about the human’s preferences. This is an important prerequisite for reliable and responsible decision making, notably to avoid inappropriate actions that may lead to safety-critical situations, and to control exploration in a targeted and cautious manner.
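As an illustration of what uncertainty-aware preference learning can look like, the sketch below fits a Bradley-Terry model to pairwise comparisons and uses a bootstrap ensemble to gauge how confident the model is about a given preference. Both techniques are stand-ins chosen for brevity, not the methodology developed in this project. All names (`fit_bradley_terry`, `preference_with_uncertainty`), the hyperparameters, and the toy data are hypothetical.

```python
import math
import random


def fit_bradley_terry(comparisons, n_items, steps=300, lr=0.5, reg=0.01):
    """Fit one utility per item by gradient ascent on the Bradley-Terry
    log-likelihood. `comparisons` is a list of (winner, loser) index pairs."""
    utilities = [0.0] * n_items
    for _ in range(steps):
        grad = [0.0] * n_items
        for winner, loser in comparisons:
            # Probability that `winner` is preferred under the current utilities.
            p = 1.0 / (1.0 + math.exp(utilities[loser] - utilities[winner]))
            grad[winner] += 1.0 - p
            grad[loser] -= 1.0 - p
        # Mean gradient plus a small L2 penalty, which keeps utilities finite
        # when an item never loses in the data.
        utilities = [
            u + lr * (g / len(comparisons) - reg * u)
            for u, g in zip(utilities, grad)
        ]
    return utilities


def preference_with_uncertainty(comparisons, n_items, a, b, n_models=20, seed=0):
    """Estimate P(a preferred over b) and its spread across a bootstrap ensemble."""
    rng = random.Random(seed)
    probs = []
    for _ in range(n_models):
        # Resample the observed comparisons with replacement.
        sample = [rng.choice(comparisons) for _ in comparisons]
        u = fit_bradley_terry(sample, n_items)
        probs.append(1.0 / (1.0 + math.exp(u[b] - u[a])))
    mean = sum(probs) / len(probs)
    spread = math.sqrt(sum((p - mean) ** 2 for p in probs) / len(probs))
    return mean, spread


# Toy data: option 0 is usually preferred over option 1; option 2 is rarely compared.
data = [(0, 1)] * 8 + [(1, 0)] * 2 + [(1, 2)]
mean, spread = preference_with_uncertainty(data, n_items=3, a=0, b=1)
print(f"P(0 preferred over 1) is about {mean:.2f}, ensemble spread {spread:.2f}")
```

In an interactive setting, a machine could use the spread as a cue: act on preferences it is confident about, and ask the human for another comparison, or fall back to a cautious default, where the ensemble members disagree.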